Abstract

Pharmacovigilance (PV) has traditionally been reactive, dependent on spontaneous adverse drug reaction (ADR) reports and manual signal detection, so serious safety issues could take months or even years to surface. By 2025, that model has shifted dramatically. Artificial intelligence (AI), big data analytics, and globally networked health information systems are now central to pharmacovigilance, making it faster, broader, and in many cases predictive.

Where regulators once relied on slow manual reviews, AI now sifts through millions of patient records, clinical notes, and spontaneous reports in near real-time, highlighting emerging safety concerns within days. This acceleration is saving lives, but it also creates new challenges. Poor-quality or biased data, ethical dilemmas surrounding accountability, and gaps in regulatory frameworks underscore the critical role of humans in pharmacovigilance.

This article explores how AI is transforming PV in 2025, the practical benefits and risks, and what the next decade may bring for clinicians, regulators, and patients.

Key Messages

- AI in pharmacovigilance accelerates ADR detection from months to days.

- Global health authorities and regulators, including the WHO, EMA, and FDA, are implementing AI-assisted PV systems.

- Data quality and human oversight remain essential to avoid false signals.

- Ethical challenges—including accountability, transparency, and privacy—persist.

- The future of pharmacovigilance is hybrid: AI for detection, humans for judgment.

Introduction: From Reactive to Proactive Drug Safety

A decade ago, pharmacovigilance was largely reactive. Clinicians reported ADRs manually, regulators reviewed cases in batches, and safety updates often lagged behind real-world risks.

The Vioxx withdrawal in the early 2000s and the global COVID-19 vaccine rollout highlighted both the importance and the limitations of traditional PV systems.

Today, the landscape is radically different. AI models integrated with electronic health records (EHRs), insurance claims databases, laboratory systems, and spontaneous reporting platforms allow safety teams to identify unusual patterns rapidly. For example, a cluster of hepatic reactions flagged by an algorithm can trigger immediate investigation across multiple countries, reducing patient exposure.

This acceleration is not just about technology; it is also about global collaboration. Regulators now share data, harmonize reporting systems, and increasingly rely on AI to coordinate safety decisions.

Global Developments in Pharmacovigilance

WHO: AI in VigiBase

The World Health Organization’s VigiBase is the world’s largest repository of ADR reports and is maintained on WHO’s behalf by the Uppsala Monitoring Centre. It has incorporated machine learning tools that detect unusual reporting patterns more efficiently than traditional statistical methods. By pairing AI-driven signal detection with expert review, WHO has shortened the average time from initial report to global safety signal by weeks.
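The sketch below makes the "traditional statistical methods" concrete. It computes two classic disproportionality statistics from a 2×2 table of report counts: the proportional reporting ratio (PRR) and the reporting odds ratio (ROR). This is a simplified baseline and not VigiBase's actual pipeline. The counts are invented. The screening rule (at least 3 reports and a PRR of at least 2) is a commonly cited rule of thumb, shown here without the chi-squared test that usually accompanies it.

```python
from dataclasses import dataclass


@dataclass
class ContingencyTable:
    """2x2 report counts for one drug-event pair (all cells assumed non-zero)."""
    a: int  # reports mentioning the drug AND the event
    b: int  # reports mentioning the drug with other events
    c: int  # reports mentioning other drugs AND the event
    d: int  # reports mentioning other drugs with other events


def prr(t: ContingencyTable) -> float:
    """Proportional reporting ratio: event share for the drug vs. all other drugs."""
    return (t.a / (t.a + t.b)) / (t.c / (t.c + t.d))


def ror(t: ContingencyTable) -> float:
    """Reporting odds ratio: odds of the event for the drug vs. all other drugs."""
    return (t.a * t.d) / (t.b * t.c)


def is_candidate_signal(t: ContingencyTable, min_reports: int = 3,
                        min_prr: float = 2.0) -> bool:
    """Simplified screen (no chi-squared test); a hit goes to expert review."""
    return t.a >= min_reports and prr(t) >= min_prr


# Invented counts from a hypothetical database of 100,000 reports.
table = ContingencyTable(a=12, b=988, c=150, d=98_850)
print(f"PRR={prr(table):.2f} ROR={ror(table):.2f} signal={is_candidate_signal(table)}")
```

With these invented counts, the event's share among this drug's reports is about eight times its share among all other drugs. The pair would be queued for expert review rather than treated as a confirmed ADR.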

EMA: NLP in EudraVigilance

The European Medicines Agency (EMA) has expanded EudraVigilance with natural language processing (NLP) to analyze narrative sections of ADR reports. This has improved the detection of complex safety issues, such as interactions involving multiple drugs.
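EMA's NLP tooling is not described in detail here, so the following is only a toy stand-in. It matches drug names from a small, hypothetical lexicon in free-text narratives and counts which drugs are mentioned together. That kind of co-occurrence can point reviewers toward a possible interaction. Real NLP systems use trained models and standardized vocabularies, and they handle negation, misspellings, and brand names. This sketch does none of that.

```python
import re
from collections import Counter
from itertools import combinations
from typing import List, Set

# Hypothetical drug lexicon; a real system would use a curated dictionary
# and trained language models rather than a hard-coded set.
DRUG_LEXICON = {"warfarin", "amiodarone", "simvastatin", "clarithromycin", "aspirin"}


def extract_drugs(narrative: str) -> Set[str]:
    """Return lexicon drugs mentioned in a free-text narrative (case-insensitive)."""
    tokens = re.findall(r"[a-z]+", narrative.lower())
    return {tok for tok in tokens if tok in DRUG_LEXICON}


def co_mentioned_pairs(narratives: List[str]) -> Counter:
    """Count how often each pair of drugs appears in the same narrative."""
    pairs: Counter = Counter()
    for text in narratives:
        for pair in combinations(sorted(extract_drugs(text)), 2):
            pairs[pair] += 1
    return pairs


reports = [
    "Patient on warfarin started amiodarone; INR rose and bleeding occurred.",
    "Bleeding after amiodarone was added to long-term Warfarin therapy.",
    "Myopathy reported after clarithromycin given with simvastatin.",
]
print(co_mentioned_pairs(reports).most_common(2))
```

`extract_drugs("No aspirin given")` still returns `{"aspirin"}` because simple matching ignores negation. This is one reason narrative mining still needs expert review.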

FDA: Sentinel and Real-World Evidence

The U.S. Food and Drug Administration’s Sentinel Initiative now uses AI to analyze insurance claims, EHRs, and registry data. Sentinel has been able to detect cardiovascular risks linked to certain diabetes drugs much earlier than spontaneous reporting alone could.
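Claims and EHR analyses of this kind often compare outcome rates between people exposed to a drug and a comparator group. The sketch below computes a crude incidence rate ratio (IRR) with a Wald 95% confidence interval on the log scale. The cohort numbers are hypothetical. The calculation also deliberately omits the confounding adjustment (for example, propensity score matching) that a real Sentinel-style analysis would require.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Cohort:
    events: int           # outcome events observed (e.g., major cardiovascular events)
    person_years: float   # total follow-up time at risk


def incidence_rate_ratio(exposed: Cohort, comparator: Cohort,
                         z: float = 1.96) -> Tuple[float, float, float]:
    """Crude IRR with a Wald 95% CI on the log scale; no confounding adjustment."""
    rate_exposed = exposed.events / exposed.person_years
    rate_comparator = comparator.events / comparator.person_years
    irr = rate_exposed / rate_comparator
    se_log_irr = math.sqrt(1 / exposed.events + 1 / comparator.events)
    lower = math.exp(math.log(irr) - z * se_log_irr)
    upper = math.exp(math.log(irr) + z * se_log_irr)
    return irr, lower, upper


# Hypothetical cohorts: new users of a diabetes drug vs. an active comparator.
exposed = Cohort(events=60, person_years=20_000)
comparator = Cohort(events=40, person_years=25_000)
irr, lower, upper = incidence_rate_ratio(exposed, comparator)
print(f"IRR {irr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Here the exposed group's event rate is about 1.9 times the comparator's, and the interval excludes 1. In a real study, that result would prompt an adjusted analysis, not a conclusion.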

Low- and Middle-Income Countries (LMICs)

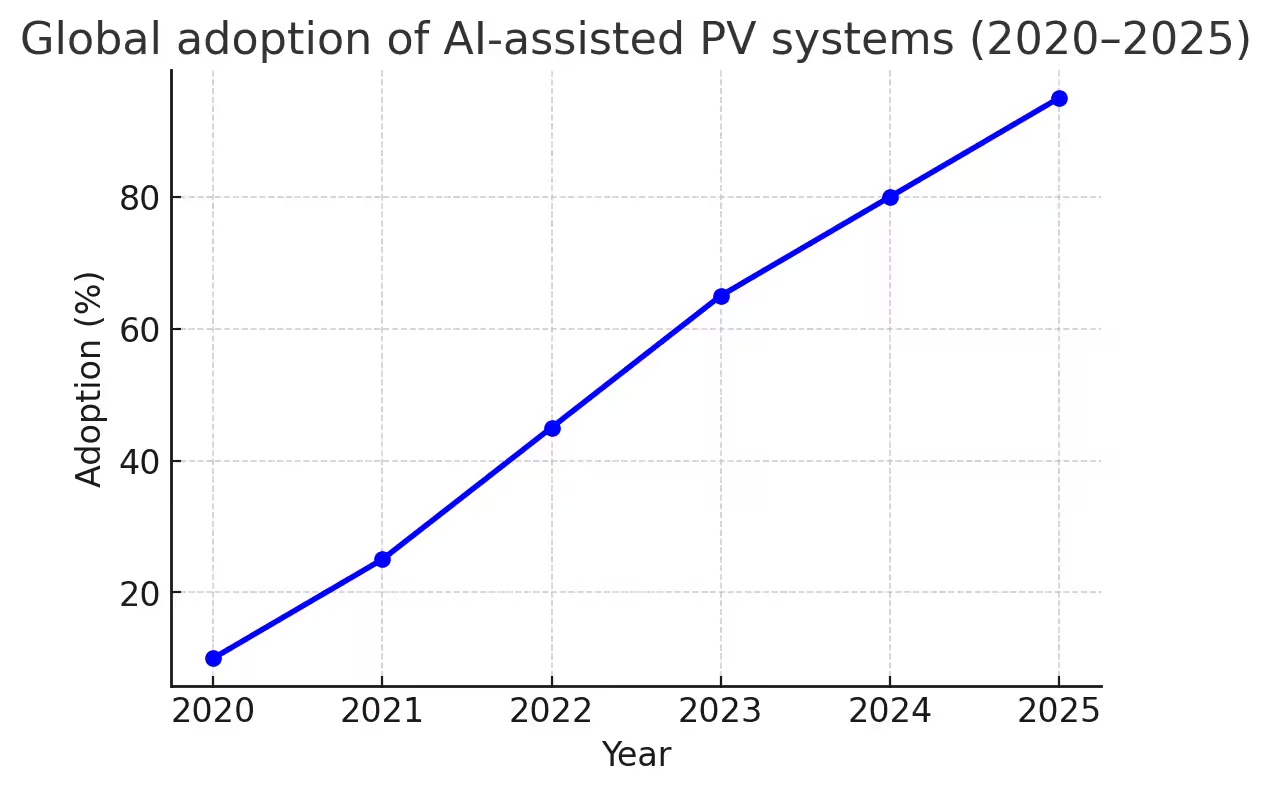

AI adoption is slower in LMICs, but partnerships with the WHO and regional PV centers are expanding access. Cloud-based AI systems allow resource-constrained countries to participate in global safety monitoring without heavy infrastructure investment. What once took months can now take weeks, sometimes less.

Figure 1 – Global adoption of AI-assisted Pharmacovigilance (PV) systems, 2020–2025.

What AI Adds — and Its Limits

Advantages of AI in PV

- Speed: Millions of records processed in hours, cutting response times dramatically.

- Scale: Ability to scan diverse datasets (EHRs, registries, clinical notes, wearable devices).

- Early Signal Detection: Identifies weak signals that may indicate emerging risks.

- Prediction: AI models are being tested to forecast potential ADRs before market launch.

For example, in oncology, AI has flagged rare immune-related toxicities associated with checkpoint inhibitors weeks before they were widely recognized.

Limitations of AI in PV

- Over-reliance Risks: Clinicians may mistakenly treat AI alerts as definitive conclusions.

- Data Quality Issues: Incomplete ADR reports or under-reporting skew outputs.

- Bias in Datasets: Under-representation of children, the elderly, and minority groups.

- Explainability Gap: Many AI algorithms function as “black boxes,” raising concerns among regulators.
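One practical response to the data-quality problem is to score each incoming report for completeness before it feeds signal detection. The sketch below uses a hypothetical list of required fields and treats them all as equally important. Real completeness scoring schemes weight fields differently and also check plausibility, which this example does not.

```python
from typing import List

# Hypothetical set of fields an ADR report should ideally contain.
REQUIRED_FIELDS = ("patient_age", "patient_sex", "suspect_drug", "reaction",
                   "onset_date", "dose", "outcome", "narrative")


def completeness(report: dict) -> float:
    """Fraction (0.0 to 1.0) of required fields that are present and non-empty."""
    filled = sum(1 for name in REQUIRED_FIELDS if report.get(name) not in (None, ""))
    return filled / len(REQUIRED_FIELDS)


def needs_follow_up(reports: List[dict], threshold: float = 0.5) -> List[dict]:
    """Reports too incomplete to feed signal detection without follow-up."""
    return [r for r in reports if completeness(r) < threshold]


sparse = {"suspect_drug": "drug X", "reaction": "rash"}
full = {name: "recorded" for name in REQUIRED_FIELDS}
print(completeness(sparse), completeness(full))
```

A report naming only a drug and a reaction scores 0.25 here. It would be routed back for follow-up rather than counted as-is.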

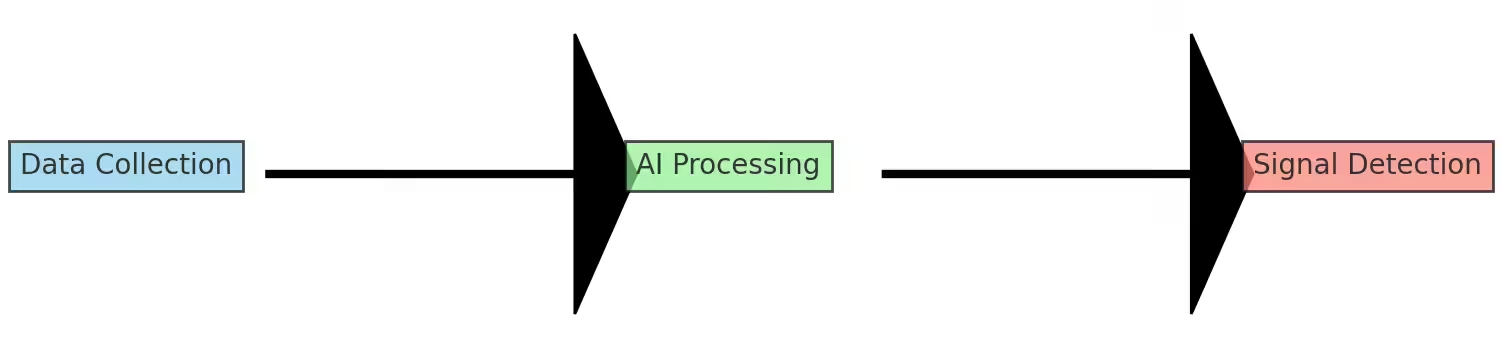

Figure 2 – Simplified AI workflow in pharmacovigilance.

Everyday Implications for Clinicians and Pharmacists

For healthcare professionals, AI tools reshape — rather than diminish — responsibilities:

- Accurate ADR reporting remains essential: AI cannot compensate for missing or vague inputs.

- AI alerts are signals, not conclusions: clinicians must verify and contextualize findings.

- Training and confidence-building: doctors and pharmacists need ongoing education to interpret AI-generated insights responsibly.

AI-enhanced signal detection is also documented in the related article "Pharmacovigilance: Drug Safety Monitoring," which shows how global data sharing and real-world evidence are being used to speed up risk detection.


Balancing Promise and Risk

Opportunities

- Rapid response: Faster signal detection reduces patient harm.

- Resource optimization: AI prioritizes cases, allowing human reviewers to focus on high-risk signals.

- Cross-border collaboration: Shared platforms accelerate coordinated responses.
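In practice, resource optimization means ranking cases so reviewers see the most concerning ones first, while nothing is closed automatically. The sketch below assumes a hypothetical model has already produced a score between 0 and 1 for each case. It builds a priority queue and hands out a batch sized to reviewer capacity. Every alert is left in a `pending_human_review` state; the case IDs and status label are illustrative.

```python
import heapq
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(order=True)
class Alert:
    sort_key: float                            # negated score: heapq pops the smallest key first
    case_id: str = field(compare=False)
    model_score: float = field(compare=False)
    status: str = field(default="pending_human_review", compare=False)


def build_review_queue(scored_cases: Dict[str, float]) -> List[Alert]:
    """Turn hypothetical model scores (0 to 1) into a highest-first priority queue."""
    queue = [Alert(-score, case_id, score) for case_id, score in scored_cases.items()]
    heapq.heapify(queue)
    return queue


def next_batch(queue: List[Alert], reviewer_capacity: int) -> List[Alert]:
    """Pop the highest-scoring alerts that reviewers can handle in this batch."""
    return [heapq.heappop(queue) for _ in range(min(reviewer_capacity, len(queue)))]


scores = {"case-001": 0.35, "case-002": 0.92, "case-003": 0.71}
queue = build_review_queue(scores)
batch = next_batch(queue, reviewer_capacity=2)
print([(a.case_id, a.status) for a in batch])
```

A heap keeps each pop cheap even when the queue is large. The more important design choice is that the model only orders the work, while the status field leaves the decision to a person.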

Risks

- False positives: May overwhelm clinicians with unnecessary alerts.

- False negatives: Missing rare but serious ADRs due to algorithm limitations.

- Cybersecurity threats: Sensitive health data requires strong protection.

- Legal liability: Unclear accountability if AI misses a safety signal.
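The false-positive and false-negative risks are partly a matter of arithmetic. When true ADRs are rare among the drug-event pairs being screened, even an accurate detector produces more false alerts than true ones. The sketch below works through that calculation. The sensitivity, specificity, and prevalence values are illustrative assumptions, not measured performance of any real system.

```python
from typing import Dict


def screening_outcomes(n_pairs: int, prevalence: float,
                       sensitivity: float, specificity: float) -> Dict[str, float]:
    """Expected alert counts when screening n_pairs drug-event pairs."""
    true_adrs = n_pairs * prevalence
    non_adrs = n_pairs - true_adrs
    true_positives = sensitivity * true_adrs
    false_negatives = true_adrs - true_positives       # real ADRs the model misses
    false_positives = (1 - specificity) * non_adrs     # alerts with no real ADR behind them
    ppv = true_positives / (true_positives + false_positives)
    return {"true_positives": true_positives, "false_positives": false_positives,
            "false_negatives": false_negatives, "ppv": ppv}


outcomes = screening_outcomes(10_000, prevalence=0.01, sensitivity=0.90, specificity=0.95)
print({name: round(value, 3) for name, value in outcomes.items()})
```

With these assumed rates, screening 10,000 pairs yields roughly 90 true alerts, about 495 false ones, and 10 missed ADRs. Only about 15% of alerts (the positive predictive value) would be genuine.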

As a result, most regulators and companies adopt a hybrid approach: AI scans and prioritizes, while trained reviewers and clinicians make the final call.

Figure 3 – Opportunities and risks of AI in pharmacovigilance.

The Ethical and Regulatory Landscape

Beneath the excitement, difficult questions remain. Who is responsible if an algorithm misses something serious? The developer, the regulator who approved the tool, or the clinician who trusted it? And how can data be shared widely enough to be useful without violating patient privacy?

Regulators are beginning to insist on explainability. Every automated alert should have a trail that can be reviewed. Without this, trust in AI systems will remain fragile.
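A reviewable trail can be as simple as a structured record attached to every alert. The record captures what the model saw, which model version produced the score, and what a human later decided. The schema below is hypothetical. The fingerprint is a SHA-256 hash of the record's canonical JSON form, so storing it at each step makes undocumented later changes detectable.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass
class AlertAuditRecord:
    """Hypothetical audit-trail entry for one automated safety alert."""
    alert_id: str
    drug: str
    event: str
    model_version: str                # which model build produced the score
    score: float
    top_features: Dict[str, float]    # feature name -> contribution to the score
    source_report_ids: List[str]      # reports the alert was derived from
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())
    reviewer: Optional[str] = None    # filled in once a human has assessed the alert
    decision: Optional[str] = None    # e.g. "validated" or "dismissed"

    def fingerprint(self) -> str:
        """SHA-256 of the record's canonical JSON form; any change alters it."""
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


record = AlertAuditRecord(
    alert_id="A-0001",
    drug="drug X",
    event="acute liver injury",
    model_version="signal-model-0.3",
    score=0.87,
    top_features={"reports_last_30_days": 0.41, "disproportionality": 0.33},
    source_report_ids=["R-101", "R-102", "R-117"],
)
fingerprint_at_creation = record.fingerprint()

# The human decision is recorded on the same trail and gets its own fingerprint.
record.reviewer = "pv_reviewer_1"
record.decision = "validated"
fingerprint_after_review = record.fingerprint()
```

Keeping both fingerprints lets an auditor confirm which version of the alert a reviewer acted on.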


Ethical and Regulatory Challenges

- Accountability: If AI fails, responsibility may be unclear — developer, regulator, or clinician.

- Transparency: Regulators are pushing for explainable AI, where alerts must be traceable and auditable.

- Privacy & Data Sharing: Compliance with GDPR (Europe), HIPAA (USA), and local laws is essential when pooling international health data.

- Equity: Ensuring data captures diverse populations to avoid systemic bias in safety detection.

- Regulatory Oversight: Some agencies now require validation studies for AI tools, modeled on the evidence standards of clinical trials.

This evolving framework underscores the delicate balance between innovation and safety.

Looking Ahead: Pharmacovigilance Beyond 2025

Integration of Wearables and Apps

Smartwatches, fitness trackers, and mobile health apps are becoming new sources of ADR data. AI will analyze these continuous data streams for early safety signals.
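The sketch below is a hypothetical illustration of screening a continuous stream. It compares a patient's daily resting heart rate after starting a drug with their own pre-treatment baseline and flags days more than three standard deviations away. This simple z-score rule is an assumption chosen for clarity. Real wearable analytics must also account for activity, sleep, and device noise, and a flagged day is only a prompt for clinical follow-up, not evidence of an ADR.

```python
from statistics import mean, stdev
from typing import List


def flag_deviations(baseline: List[float], on_treatment: List[float],
                    z_threshold: float = 3.0) -> List[int]:
    """Indices of on-treatment days more than z_threshold SDs from the baseline mean."""
    mu, sigma = mean(baseline), stdev(baseline)   # stdev needs at least 2 baseline days
    if sigma == 0:
        return []                                 # flat baseline: z-scores are undefined
    return [day for day, value in enumerate(on_treatment)
            if abs(value - mu) / sigma > z_threshold]


# Hypothetical daily resting heart rate (beats per minute) for one patient.
baseline_bpm = [62, 64, 63, 61, 65, 63, 62]       # week before starting the drug
on_treatment_bpm = [63, 64, 66, 71, 74, 73]       # first days on the drug
print(flag_deviations(baseline_bpm, on_treatment_bpm))
```

Here days 3 to 5 (zero-indexed) are flagged. Day 2, at 66 bpm, stays within the threshold.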

Proactive Risk Forecasting

Machine learning models are being tested to identify safety issues before drug approval, using simulation and predictive analytics. This could transform regulatory reviews.

Expansion in LMICs

Cloud-based AI tools and WHO partnerships will expand access to AI pharmacovigilance in Africa, Asia, and Latin America.

Patient-Centered Pharmacovigilance

AI will increasingly use patient-reported outcomes (PROs), giving patients a stronger voice in post-market safety.

Strengthened Human Oversight

No matter how advanced AI becomes, clinicians will remain central in interpreting, validating, and communicating safety signals.

Conclusion

Artificial intelligence is transforming pharmacovigilance from a slow, reactive process into a faster, proactive, globally networked system. By compressing the timeline between adverse event reporting and regulatory action, AI is already saving lives. Yet the technology is not infallible. Poor data, biases, privacy concerns, and accountability gaps underline the continuing need for human oversight.

The real promise of AI in pharmacovigilance lies in partnership — machines to process and highlight, humans to interpret and act. Looking toward 2030, success will not be measured by how advanced the algorithms are, but by how effectively they integrate into professional practice to ensure drug safety worldwide. The future of PV is not machines alone, but machines and humans working side by side.
